You could check the source to see their methodology and how they came to their results.

While that is an interesting topic, it’s only tertiarily relevant to the original point. You wanted to see some evidence and numbers on how frame rate affects latency beyond display refresh latency, and I’ve provided you a great deal of it. We aren’t anywhere close to where the diminishing returns would be inconsequential, and especially not in a title like Darktide where it’s basically impossible to get framerates consistently above 200fps without frame generation, and frame generation adds a ton of latency.

3 Likes

No, you’ve sent me a video that doesn’t address what I’m debating you about. I watched the video.

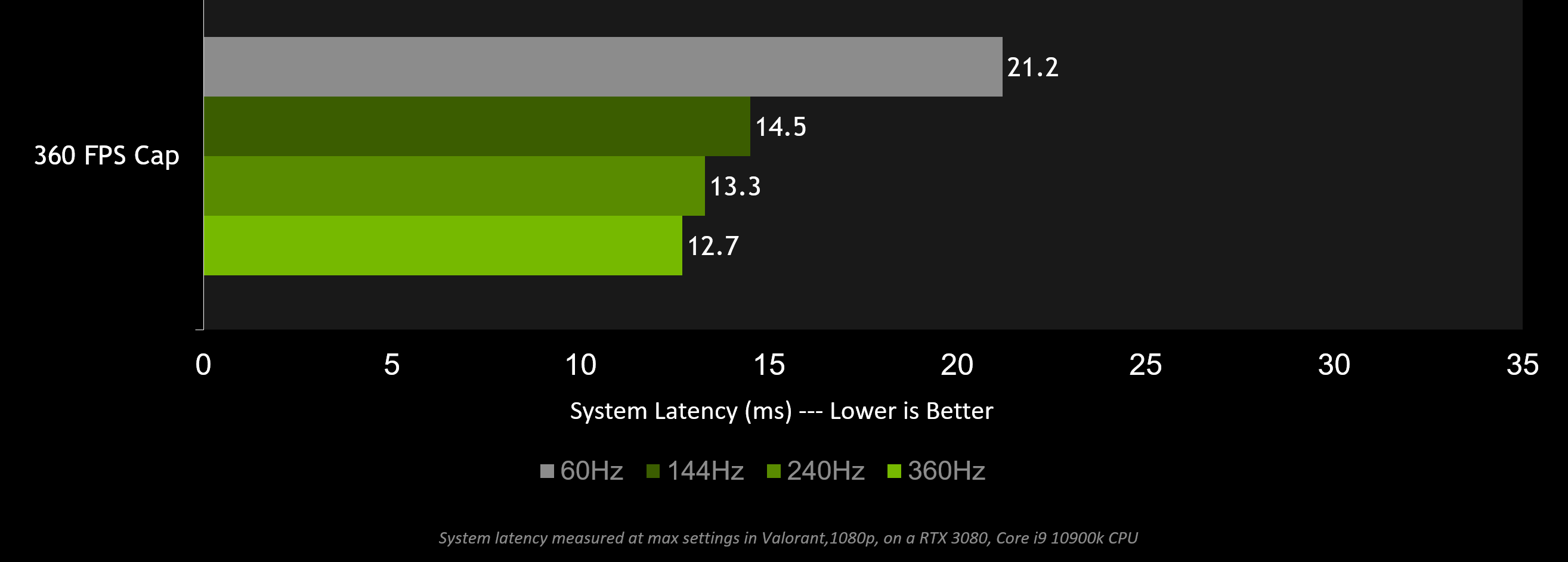

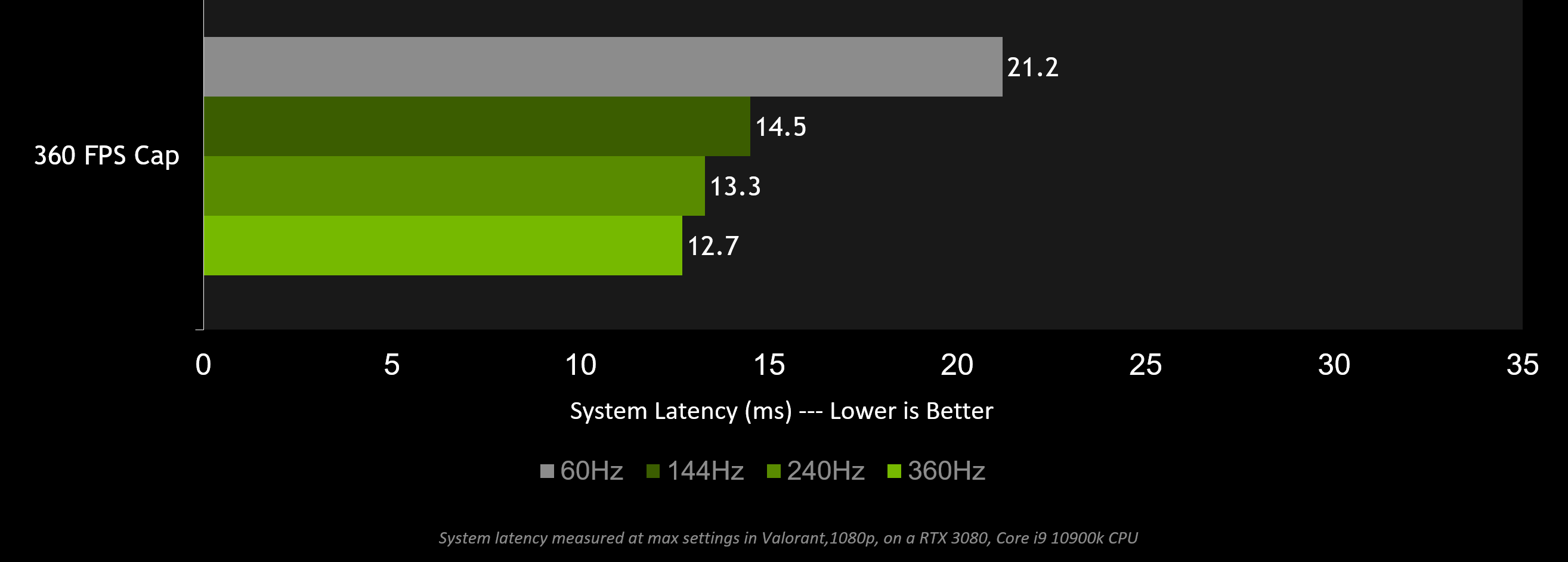

I couldn’t find that graph to see what they are referring to but I did find graphs on nvidia’s website comparing system latency as a whole, which I think you’d appreciate.

Going from 60 fps to 360 fps cuts the system latency by 8 ms, according to Nvidia’s test on the Nvidia Reflex page. So that is a direct comparison of what fps does to the entire latency, correct? Going from 60 to 360 fps cuts the entire response time by 0.008 seconds. Right?

Are you talking about this?

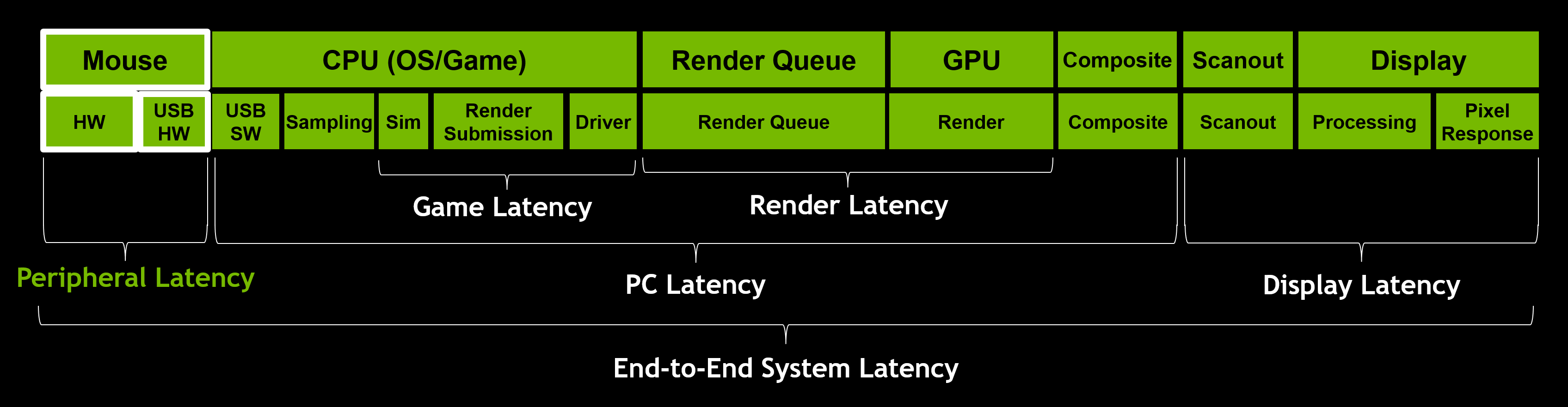

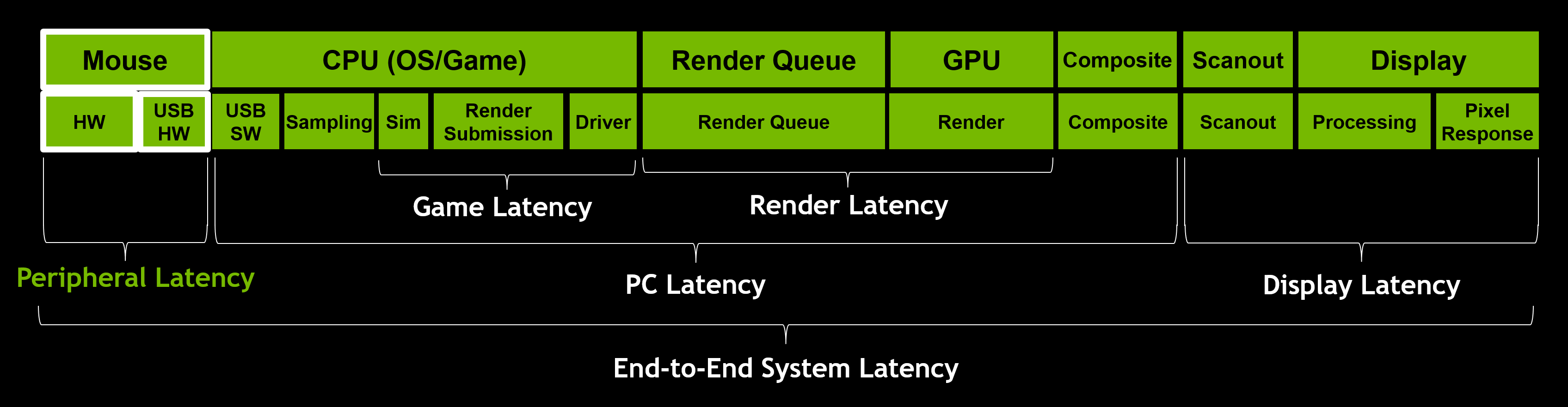

This is just display latency. It shows how at a fixed framerate, 360fps, and everything else being equal (this is shown at the bottom), monitor refresh rate affects total system latency.

You’re out of your depth here, clearly, and I’ve provided you with an enormous amount of information. Your inability to process it is not my fault.

Anyway, your assessment of total system latency is categorically incorrect, and I’ve proven that beyond any shadow of a doubt.

2 Likes

No, clearly that is not what I’m talking about. It doesn’t even compare 60 vs 360 lol. Why would I use that graph to compare fps? Obviously that graph doesn’t show that haha

So you can’t do the math.

You show me a video that talks about system latency as a whole, does not go into anything technical, and shows literally Nvidia slides.

Then you profess this evidence is satisfactory even though you can’t even defend one line of scrutiny towards it.

Then you insult my intelligence by assuming I am out of my depth.

I can understand data, math, and statistics just fine. You’ve provided me with none of those things.

I just found an actual study on Google by Jansen, et al. It’s from 2009, but the authors concluded that the difference in the number of moving objects the study participants were able to click on when comparing 45 to 60 fps was insignificant. They did not reject the null hypothesis.

I’d imagine 120 to 240 would be even more meaningless, but I’d be interested for you to educate me more on this topic.

Edit: it’s the graph 2 above the one you somehow thought I was referring to. A 60 Hz refresh rate compared to a 360 Hz refresh rate is basically meaningless according to Nvidia: a 0.008 second difference in system latency.

It is blatantly obvious you don’t understand anything that has been presented to you. You’re just hand-waving it away.

This is the crap I’m talking about. You’re contradicting yourself constantly and spouting nonsense. It’s an indisputable fact that increasing framerate lowers end-to-end system latency beyond just display latency. Even if we were just talking about the refresh portion of display latency, going from 60 to 360fps is a reduction of 13.89ms. If that page was only talking about the reduction of PC latency, then you’d have to add the two together, in which case the total reduction would be 21.89ms, and this is actually representative of what that kind of fps jump would entail in a very well optimized game that is using reflex.

The video I posted first gives a GPU bound example where the CPU render is 3ms, the GPU render is 10ms, but because the render queue is full, the CPU has to wait so it’s actually 10ms as well. That’s 20ms of latency before the display has even received any information, which is double the 10ms that 100fps would have on a 100hz monitor if there weren’t any PC latency.
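For anyone who wants to check that example, here is a rough sketch of it in Python. It is a toy model, not how any real driver schedules work: the function name and the `queue_full` flag are made up for illustration, and the only assumption is the one stated above, that a full render queue makes the CPU stage wait as long as the GPU stage takes.

```python
def pc_render_latency_ms(cpu_ms: float, gpu_ms: float, queue_full: bool) -> float:
    """Toy model of CPU + GPU render latency for one frame (hypothetical helper).

    Assumption: when the render queue is full, the CPU stage is stalled by the
    GPU, so its effective time is the larger of the two stage times.
    """
    effective_cpu_ms = max(cpu_ms, gpu_ms) if queue_full else cpu_ms
    return effective_cpu_ms + gpu_ms


# Numbers from the GPU-bound example: 3 ms CPU render, 10 ms GPU render.
gpu_bound = pc_render_latency_ms(3, 10, queue_full=True)
queue_empty = pc_render_latency_ms(3, 10, queue_full=False)

# Frame time at 100 fps, i.e. the 10 ms the display side alone would account for.
frame_time_100fps_ms = 1000 / 100

print(f"queue full:  {gpu_bound:.0f} ms before the display gets anything")
print(f"queue empty: {queue_empty:.0f} ms")
print(f"100 fps frame time: {frame_time_100fps_ms:.0f} ms")
```

With the queue full this gives the 20ms from the example, twice the 10ms frame time. The same inputs with an empty queue come out at 13ms, which is the part of the gap that an empty render queue would recover in this simplified model.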

It’s not actually all that complicated, but you’re just not getting it.

3 Likes

We aren’t talking about CPU bound vs GPU bound “bottlenecks”.

All things being equal, increasing fps from 120 to 240 is such a small difference in latency that it is meaningless to me.

Here I’ll put it in a way you’ll understand:

It’s so insanely minuscule that it’s a logical disaster to try to comprehend how shallow your argument is so far.

Even if there is no bottleneck, like I said in the previous post, going from 60 to 360fps is going to be over 20ms total even under a best case scenario where you’re not getting much benefit because the render queue was already empty in every circumstance.

You actually read that and believed the total end-to-end system latency reduction would be 8ms, when display latency alone (which, until I pointed out your error, you thought was the same as end-to-end latency) accounts for 13.89ms, meaning a mere 8ms reduction is physically impossible.

1 Like

I’m telling you what Nvidia reported. And what the study authors reported.

End to end system latency is what they are reporting. They define it in the section above it

That graph contradicts everything you are saying lol

Going from a 60 Hz refresh rate to 360 Hz decreases latency by 40%…but that’s super misleading since 8 ms is nothing

Maybe you can email Nvidia and tell them they are wrong?

Based on your inability to grasp the topic at hand, I don’t take your presentation of this information particularly seriously, and as I can do the math (and literally just did), it’s physically impossible to only get an 8ms reduction going from 60 to 360fps because just the display portion of the end-to-end latency chain is more than that. (1000/60)-(1000/360) = 13.89. That’s the minimum latency difference assuming the rest of the process defied physics and happened totally instantaneously, which of course it doesn’t. So, in reality, you then have to add that latency to the total, which is probably what the page you were reading was talking about, so 13.89 + 8 = 21.89ms.
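The frame-time arithmetic is easy to reproduce. A minimal sketch in Python, with helper names that are made up for illustration; it only covers the frame-time portion (1000 / fps) and says nothing about input, render, or composite latency:

```python
def frame_time_ms(fps: float) -> float:
    """Time between consecutive frames, in milliseconds."""
    return 1000.0 / fps


def frame_time_saving_ms(low_fps: float, high_fps: float) -> float:
    """How much shorter each frame is at high_fps compared with low_fps."""
    return frame_time_ms(low_fps) - frame_time_ms(high_fps)


# The two jumps argued about in this thread.
for low, high in [(60, 360), (120, 240)]:
    saving = frame_time_saving_ms(low, high)
    print(f"{low} -> {high} fps: {saving:.2f} ms less per frame")
```

This prints 13.89 ms for 60 to 360 and 4.17 ms for 120 to 240. Whether a few milliseconds matters to a given player is a separate question, but the frame-time difference itself is just this subtraction.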

Saying you were out of your depth was me being nice.

Post the graph. Post the article. You’re so full of it.

1 Like

LOL

Looks at Nvidia data

“Well I don’t believe it!”

Google “fps affect on latency” and it’s like the 1st or 2nd link. I gave you the study authors name to confirm.

The graph I am talking about is the same Nvidia webpage you are on. It’s 2 graphs above the one you thought I was talking about for some dumb reason. It’s data

I’m the only one who has posted any data. You couldn’t understand any of it, and now you’re saying you have different data that you can understand but refuse to provide.

I’ve also fully explained how what you’re suggesting is physically impossible.

1 Like

That’s literally just showing the difference exclusive fullscreen is making versus windowed mode at a given framerate. In the image I linked earlier, and which I will link again, it’s the “composite” portion of the end-to-end latency. Exclusive fullscreen eliminates this from the chain entirely.

And by the way, we are referring to the same article.

1 Like

It’s not literally just showing that lol

It is showing 60 vs 360 by tangent. You can compare the 2 by looking at it. All the other variables are the same

Yes, I know we are referring to the same article. I literally said it’s 2 graphs above the one you thought I was talking about even though it made no sense to assume that

That’s not end-to-end system latency, it’s PC latency. They did label that badly, granted.

So, my original assumption was right, and all the math that followed. The difference between those two frame rates in reality is 21.89ms, assuming the mouse/display are identical.

1 Like

End to end system latency = system latency. There is no reason to think otherwise.

They give you the variables below the graph. Everything is the same. Going from 60 to 360 will save you 0.008 seconds in system latency. Or as you would put it, “disastrous.”

System is synonymous with computer in many cases. If they meant end-to-end, they would have said end-to-end. It is a bad label though.

It’s also physically impossible for this to be the case. I have provided the math you so desperately crave and an explanation as to why it is the way it is. I’ve also provided you a graph that is ACTUALLY end-to-end system latency between framerates, and you’re ignoring it entirely.

I’m not gonna lie, I don’t know what graph you speak of. Send again

They did say system latency! What else could they mean by that? Even if they meant PC latency only, the only thing left is peripherals, which I am not debating about. I also have a really hard time believing they are presenting a refresh rate and NOT talking about display latency, too. Hence, end to end system latency.

Ignore the accuracy portion. It’s the x-axis we care about. These values vary based on the game, but that’s a lot more representative of the actual latency reduction these framerates make when measuring total end-to-end system latency.

A latency reduction of 21.89ms from 60 to 360fps would be expected in a game that has a highly optimized game engine and is using exclusive fullscreen and reflex mode. It’s usually going to be a lot more than that. It is physically impossible for it to be just 8ms because the display portion of end-to-end system latency between 60 and 360hz is more than 8ms.

Edit: So, I actually checked out the research paper and the presentation slides, and yes, they are omitting the display portion of latency from a lot of those charts unless they specify total end-to-end system latency which must include all parts of the chain. Again, I knew this had to be the case because as I’ve said many times, it’s physically impossible for an fps jump between 60 and 360 to only be 8ms if we take the display into account as well.

Note that it explicitly says input+render and omits display.