60-70 fps is an acceptable frame rate by any non-hyper-competitive standard. 130 fps is smooth as butter.

I’m just saying my experience appears to differ drastically from yours.

What FPS game with graphical fidelity similar to Darktide’s are you comparing it to?

Without DLSS, take your pick. Gears 5 stomps all over Darktide from a technical perspective, and it didn’t need upscaling to achieve it.

I’d say even games from the mid-to-late 2010s look better than Darktide without DLSS. Things like MGSV and Doom 2016. Doom Eternal is light-years ahead.

And for the record, 60 fps is absolutely terrible for a shooter. That is the bare minimum for the game to be considered even remotely playable at the most casual level. Decent frame rates for a shooter start at 240 fps.

Low average frame rates are not Darktide’s biggest performance problem either. Frame time consistency is really poor, and this hurts moment-to-moment gameplay even more than a low average does.
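
To illustrate how an average can hide poor consistency, here is a small Python sketch comparing two made-up frame time traces with the same average. The "1% low" used here (average fps over the slowest 1% of frames) is one common definition, not the only one, and the traces are hypothetical, not Darktide captures.

```python
def summarize(frame_times_ms):
    """Return (average fps, 1% low fps) for a list of frame times in milliseconds."""
    n = len(frame_times_ms)
    avg_fps = 1000.0 * n / sum(frame_times_ms)
    # One common "1% low" definition: average fps over the slowest 1% of frames.
    worst = sorted(frame_times_ms, reverse=True)[: max(1, n // 100)]
    low_fps = 1000.0 * len(worst) / sum(worst)
    return avg_fps, low_fps


# Hypothetical traces: both take 1000 ms to render 100 frames.
traces = {
    "steady": [10.0] * 100,          # every frame takes 10 ms
    "spiky": [9.0] * 99 + [109.0],   # slightly faster frames plus one 109 ms hitch
}

for name, frames in traces.items():
    avg, low = summarize(frames)
    print(f"{name}: avg {avg:.0f} fps, 1% low {low:.0f} fps")
```

Both traces average 100 fps, but the single 109 ms hitch drags the spiky trace's 1% low down to about 9 fps.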

I think Darktide has better graphics than all those games you listed at max settings.

Aren’t Gears 5 and MGSV third-person shooters?

None of those games have as many enemies on screen at once AND the gore levels that DT has, while still looking as good as Darktide.

I think we will just have to disagree about frame rates. I played DT at 40 fps and it sucked. I played it at 70 fps and it was great. I noticed it being smoother at 130 fps when I got my 4070 Ti, but I wouldn’t consider it significantly better than 70 fps in any meaningful way. 240 fps being “decent” sounds ridiculous to me. I actually intentionally limit my games to 140 fps even if they go higher, since I honestly don’t notice a difference.

The fact that they added silent bursters in a patch and then just didn’t fix it for months is so Fatshark…

Please god, if you can’t fix it, can we just go back to silent crushers? I’d love to play the game again too, for as mixed a bag as it is.

The base assets are objectively worse, and the relative performance without DLSS is astonishingly worse. The game at 1080p ultra without ray tracing looks like something out of 2010, and it runs at less than 200 fps most of the time. I can play Gears 5 with every setting maxed at 1080p and get well in excess of 300 fps. If I selectively lower some settings, over 500 fps.

Selectively lowering Darktide settings barely does anything. Even if I lower texture quality and global prebaked lighting and disable AO entirely, the frame rate hardly changes.

I like Darktide’s core gameplay, but it’s a technical disaster that relies on upscaling as a crutch. We haven’t even gotten into the fact that the game is an unstable mess that frequently crashes on various configurations. Fortunately, my personal experience hasn’t been too bad in this area, but I know plenty of people for whom this is still a major problem to this day.

I think you really like hyperbole lol

Let’s just agree to disagree on what is suitable for graphics/fps

What are you taking as hyperbole? The numbers I gave were all accurate, and Darktide’s framerate does not drastically improve when lowering basic settings like texture quality and AO on a high end system. The only settings that make a big difference are overall resolution, ray tracing, and most importantly, DLSS.

As far as serious bugs and crashing are concerned, I have one friend who dropped the game shortly after launch because he could not complete 9/10 runs without a crash, and another who usually still gets one crash per session and encounters serious visual and audio bugs on the regular.

The only thing that is subjective is minimum acceptable framerate for shooters. I hate to break it to you, but anybody playing anything even remotely seriously, PVP or PVE, is going to want an incredibly high framerate in a shooter. A higher framerate not only makes tracking a moving target much easier, it also massively increases responsiveness. This increase in responsiveness continues even after your in-game fps far exceeds your monitor’s maximum refresh rate.
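
A toy model can show why responsiveness keeps improving past the refresh rate. It assumes (hypothetically) that vsync is off, each frame reads input when it starts and finishes one frame time later, and every refresh scans out the newest finished frame. The sketch averages how old the displayed input is at each 144 Hz refresh. It ignores render queues, tearing, and everything else in a real pipeline, so the numbers are illustrative only.

```python
from fractions import Fraction


def avg_input_age_ms(fps, refresh_hz=144, refreshes=1000):
    """Average age (ms) of the input shown at each display refresh, vsync off.

    Toy model (assumption): frame k reads input at k * frame_time and finishes
    one frame_time later; every refresh scans out the newest finished frame.
    Fractions keep the timing exact, so floor() never lands on the wrong frame.
    """
    frame = Fraction(1000, fps)
    refresh = Fraction(1000, refresh_hz)
    total = Fraction(0)
    for r in range(1, refreshes + 1):
        t = r * refresh
        k = t // frame - 1        # newest frame with (k + 1) * frame <= t
        total += t - k * frame    # how old that frame's input is at scanout
    return float(total / refreshes)


for fps in (100, 200, 400):
    print(f"{fps} fps on a 144 Hz display: {avg_input_age_ms(fps):.1f} ms")
```

In this model the displayed input keeps getting fresher at 200 and 400 fps on a 144 Hz panel, because each refresh simply grabs whichever frame finished most recently.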

“Disaster” and the other exaggerations you use to describe things are hyperbole.

And I’d hate to break it to you, but if you do the math on anything over 120-140 fps, the latency you save by gaining fps is minimal, and there are huge diminishing returns past that.

Unless you are playing competitively for money, where every 0.001 seconds of reaction time counts… who cares?

If the game looking and performing worse than games nearly a decade older than it, on top of serious stability issues that still haven’t been fully resolved, isn’t a technical disaster, nothing is.

The returns are diminishing, but not insignificant. The difference between 120 fps and 240 fps is nowhere near as large as 60 to 120, but it’s still 4 ms in display lag, and anywhere from 20 to 40 ms in total system latency depending on the pipeline.
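
The 4 ms figure is just the change in frame time, which is 1000 / fps. A quick Python sketch of that arithmetic shows both sides of the argument: each doubling saves half as much as the previous one, yet it still halves the frame time. It only covers the per-frame interval; the 20-40 ms total-system figure depends on the rest of the pipeline and is not something this calculation can confirm.

```python
def frame_time_ms(fps):
    """Time between consecutive frames, in milliseconds."""
    return 1000.0 / fps


for low, high in [(60, 120), (120, 240), (240, 480)]:
    saved = frame_time_ms(low) - frame_time_ms(high)
    print(
        f"{low} -> {high} fps: {frame_time_ms(low):.2f} ms -> "
        f"{frame_time_ms(high):.2f} ms per frame, saves {saved:.2f} ms"
    )
```

That prints savings of 8.33 ms, 4.17 ms, and 2.08 ms for the three steps.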

If you’re playing a shooter competitively at all and you aren’t trying to get your frame rate as high as reasonably possible, you’re doing it wrong. Aiming with a mouse is different from simply reacting to something with a binary button press. It’s massively improved by reductions in end-to-end system lag, and end-to-end system lag is disproportionately affected by your frame rate. The part of the total lag you’re referring to is display lag, and that’s actually a relatively small portion of the total.

Going from 144 fps to 240 fps saves you roughly 2.5 ms in input lag (double-check my math).

That is 0.0025 seconds faster.

The average reaction time is around 0.2 seconds.

Think about that. I certainly don’t notice a thing, but maybe you do.
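
Checking that math with frame time = 1000 / fps: the 144 to 240 fps saving comes out to about 2.78 ms rather than 2.5 ms, which is still under 1.5% of a 200 ms reaction time. Like the post, this sketch counts only one frame interval and nothing else in the pipeline.

```python
def frame_time_ms(fps):
    """Time between consecutive frames, in milliseconds."""
    return 1000.0 / fps


saved_ms = frame_time_ms(144) - frame_time_ms(240)
reaction_ms = 200.0  # the ~0.2 s average reaction time quoted in the post

print(f"144 -> 240 fps saves {saved_ms:.2f} ms per frame")
print(f"that is {100 * saved_ms / reaction_ms:.1f}% of a {reaction_ms:.0f} ms reaction time")
```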

You’re misinformed.

You’re referring to display latency, which is a tiny portion of end-to-end system latency. It occurs at the very end of the process. Going from 144 fps to 240 fps saves double-digit milliseconds at minimum, oftentimes more. It does vary a bit game by game because the pipeline is not identical between engines.

Then inform me. Show me the math

After watching that, I can conclude there are several different variables contributing to total latency, one of which is the fps we are talking about. I still don’t know what you are talking about, though.

And that CS pro would benefit from the highest possible fps… he makes money doing this. I get that.

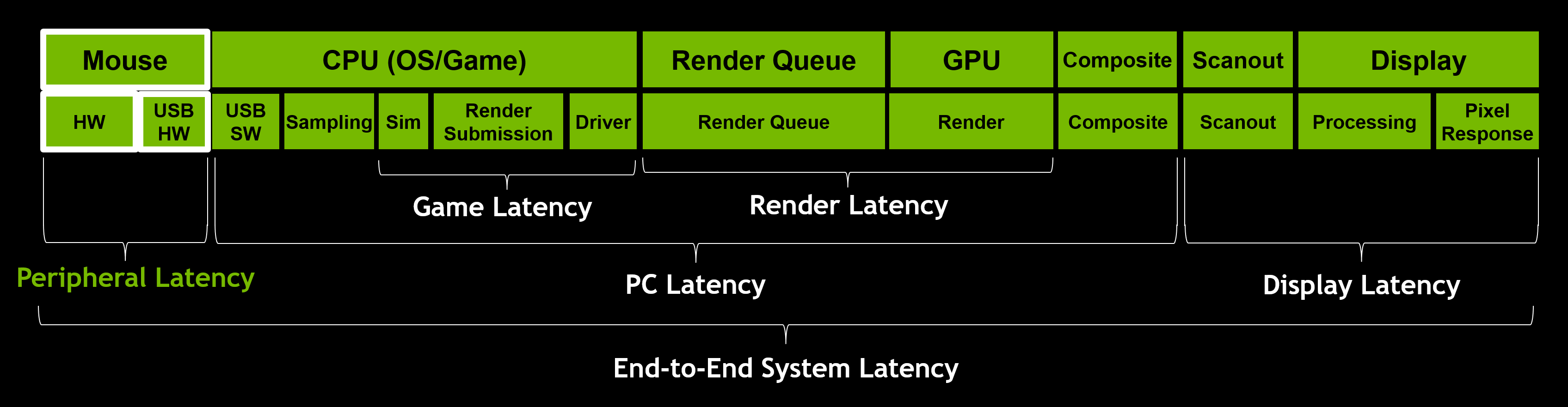

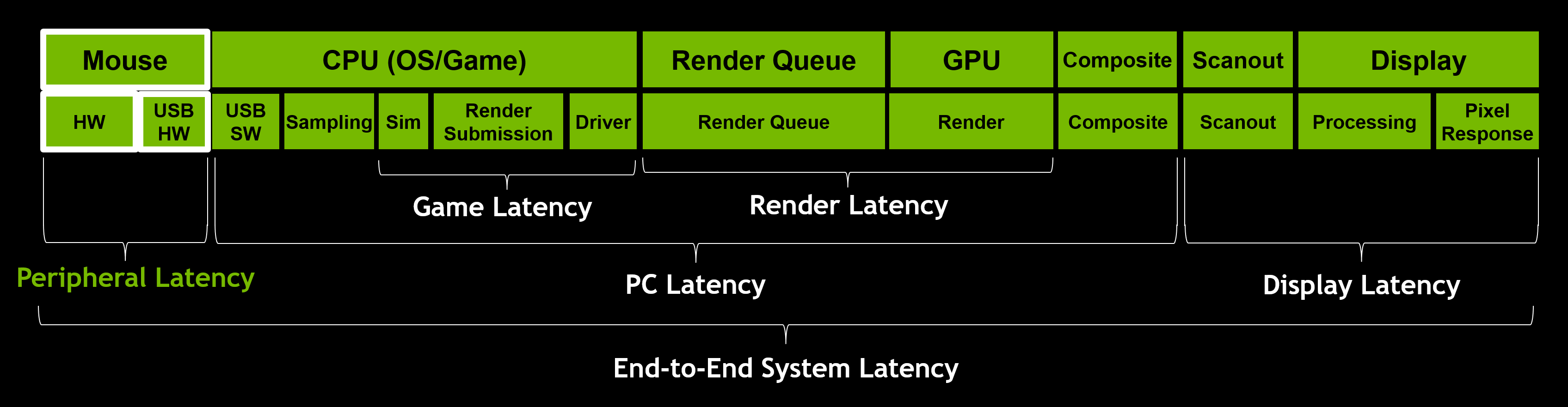

This graphic is provided by Nvidia and it’s used and explained in the video I linked.

Long story short, end-to-end latency is determined by peripheral latency (your mouse and keyboard), PC latency (a combination of the hardware and software you’re running), and display latency. The graphic showing PC latency as most of the bar is not arbitrary, because it is in fact the biggest contributor to total end-to-end system latency. Frame rate is not the only thing that determines PC latency, but it is one of the most significant, and with everything else equal (same game, same hardware, same settings), it will be by far the single largest factor in end-to-end system latency.
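
The breakdown above is additive: end-to-end = peripheral + PC + display. Below is a minimal Python sketch of that sum. The millisecond values are placeholders made up for illustration, not measurements of Darktide, of Nvidia's graphic, or of any particular setup; the point is only that the largest component dominates the total, so shrinking it moves end-to-end latency the most.

```python
from dataclasses import dataclass


@dataclass
class LatencyBreakdown:
    peripheral_ms: float  # mouse/keyboard
    pc_ms: float          # game + OS + CPU/GPU rendering, the part fps affects most
    display_ms: float     # refresh wait, display processing, pixel response

    def total_ms(self) -> float:
        return self.peripheral_ms + self.pc_ms + self.display_ms

    def shares(self) -> dict:
        """Fraction of end-to-end latency contributed by each component."""
        total = self.total_ms()
        return {
            "peripheral": self.peripheral_ms / total,
            "pc": self.pc_ms / total,
            "display": self.display_ms / total,
        }


# Hypothetical example values, not measurements from Darktide or any game.
example = LatencyBreakdown(peripheral_ms=2.0, pc_ms=20.0, display_ms=6.0)
print(f"end-to-end: {example.total_ms():.1f} ms")
for part, share in example.shares().items():
    print(f"{part}: {share:.0%}")
```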

Yeah, I understood that, but I asked for the math behind your claim that going from 120 to 240 fps is significant. As far as I know, raising your fps from 140 to 240 will save you 0.0025 seconds, all else being equal.

Then you’re not understanding the information I provided.

Going from 144 fps to 240 fps will shave 2.77 ms off your display latency, but far, far more than that off PC latency, because of how the CPU and GPU process frames and where in the pipeline input can actually be accepted in time for the next frame.

It’s not a fixed number, though, as it varies game to game, and even within the same game depending on settings like Reflex.
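
One way to see how a per-frame saving can show up more than once: in a simple model where the game only reads input at the start of each frame, and the frame then spends a couple of frame times in CPU/GPU work, an input waits half a frame on average before it is read and then rides through the whole pipeline. `frames_in_flight=2` is an assumed value, not a measurement of Darktide or any engine; queue-reducing settings like Reflex would lower it.

```python
def average_latency_ms(fps, frames_in_flight=2, samples=10_000):
    """Average time from an input event to the end of the frame that uses it.

    Toy model (hypothetical assumptions, not measured from any engine):
    - the game only reads input at the start of each frame, so an event
      waits until the next frame begins;
    - once read, the frame spends `frames_in_flight` frame times in
      CPU + GPU work before it is finished.
    """
    frame = 1000.0 / fps
    total = 0.0
    for i in range(samples):
        arrival = frame * (i + 0.5) / samples  # events spread evenly across one frame
        wait = frame - arrival                 # time until the next input read
        total += wait + frames_in_flight * frame
    return total / samples


for fps in (144, 240):
    print(f"{fps} fps: {average_latency_ms(fps):.1f} ms")

saved = average_latency_ms(144) - average_latency_ms(240)
print(f"saved: {saved:.1f} ms, vs {1000 / 144 - 1000 / 240:.2f} ms of frame time")
```

With these assumed values the model averages (frames_in_flight + 0.5) frame times of delay, so the roughly 2.78 ms per-frame saving becomes roughly 6.9 ms. Changing `frames_in_flight` changes the multiplier, which fits the point that the real figure varies by game and by settings.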

Not that I don’t believe you, but if you are so sure about this, then I’m sure you can just do the simple math so my little brain can understand how shaving off 0.0025 seconds can compound into other latency.

Not only are you considering only display latency, you’re considering only the refresh latency of the display. If you’re using a good display, this will be the bulk of it, but technically there is also display processing latency and pixel response, so even that 2.77 ms number is a theoretical difference; the real one will be slightly more than that, even between two different 240 Hz displays.

That GamersNexus video goes into detail and does the math in specific examples, showing the difference each part of the process makes.

Here’s a chart that shows how drastic a difference framerate makes on latency. But again, the exact figures will vary based on game, settings, and hardware even at a given framerate.

The scenario you’re presenting where you only take into account the refresh portion of display latency is one where every other part of the pipeline happens literally instantaneously.

We aren’t talking about the other processes, though. Just the fps increase from having a better graphics card or turning down settings.

What is the % aiming improvement relative to? What does that mean? How did they measure it? Is a 37% increase meaningful?
